8 Common SEO Issues (and How to Troubleshoot Them)

You work for months, even years, to rank for relevant keywords on search engines. And you do that work knowing there is no guarantee that your rankings will climb, or even hold steady.

It’s even scarier that you could end up losing years of consistent, hard work in seconds. How?

You can lose it all by not resolving SEO issues in time.

They might look small at first. Who cares about spammy referral traffic? You probably think every site suffers from it, especially those with better search results.

True.

But do you know that if you don’t handle it now, it could grow to the point where your Google Analytics data becomes unreliable?

And when you finally do try to resolve it, you realize that most fixes can’t be applied to historic data. Ugh!!

Perhaps today you don’t care about your site speed. It might be just fine with the current data. So, you don’t mind adding large 150kb image files.

But what happens after a couple of months of adding that much site cargo?

You might end up with a site that’s too bloated to load in even 5 seconds. That web design isn’t suitable for a positive user experience.

It’s easy to ignore issues and let them grow as your site grows. You’ve got a lot to do.

But all those to-dos will suddenly seem unimportant when you see your site traffic in free fall and your bounce rate shooting through the roof.

Now that you’re already starting to worry about all the things that aren’t right with your SEO, let me show you how to fix 8 of the most common issues that you might be facing right now regarding web design and hosting issues.

1. Spammy traffic (Referral SPAM)

Have you ever noticed a site that has nothing to do with you and still sends a lot of traffic your way?

Perhaps you have. Because no matter how smart Google gets, spam traffic referrals still find their way into our Google Analytics reports.

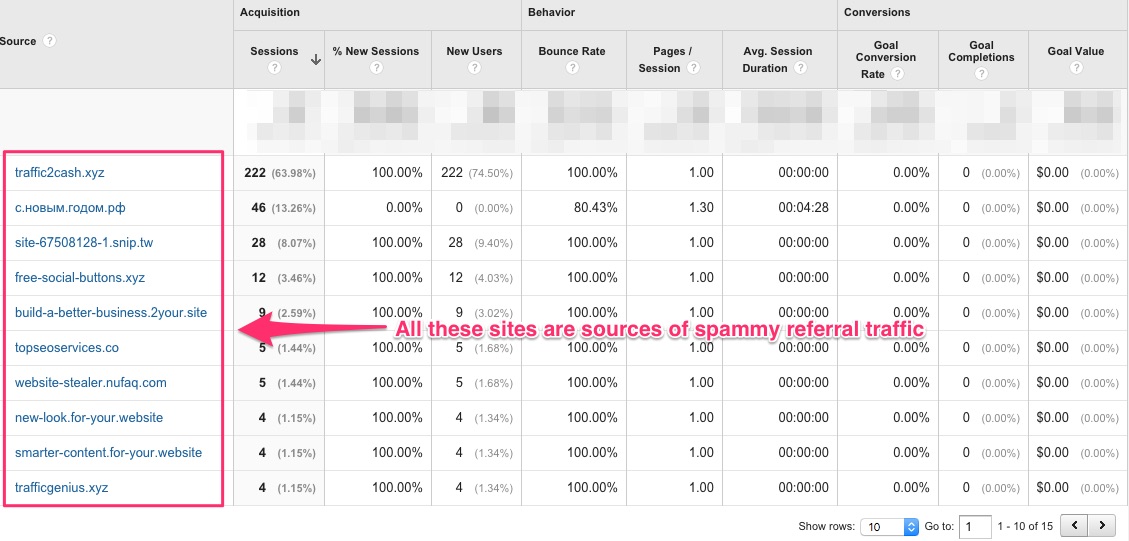

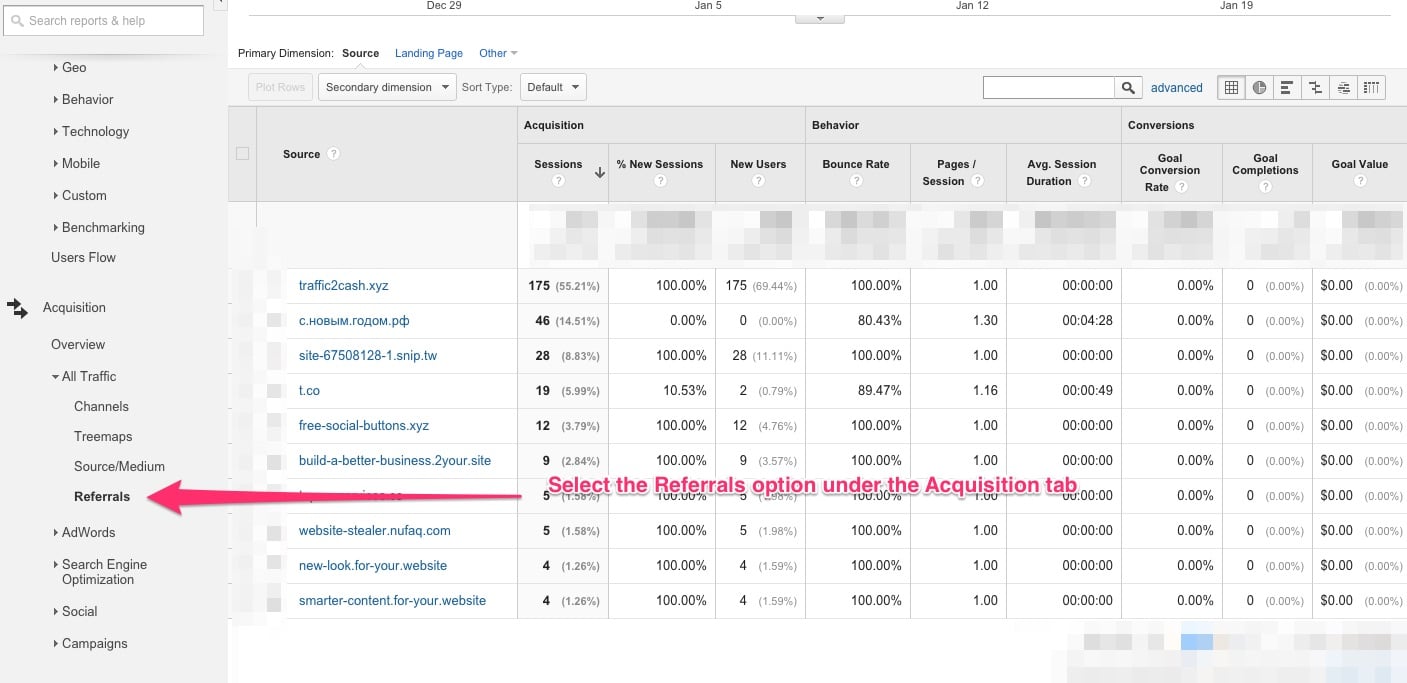

I have a screenshot here that shows several irrelevant traffic sources sending “visitors” to a site:

As you can see, the referred visitors don’t even stay on the site for a second and bounce back immediately.

All such traffic is spammy referral traffic.

And, it’s not just spammy looking domains that send this icky traffic. If you happen to see a high authority site (that doesn’t have any backlinks to your site) send you traffic, it’s almost always a case of spammy referral traffic. Any URL can be used as the referral source.

How do you know if a site is sending spammy traffic:

If you find a site sending you traffic without linking to you, it’s a spam referral.

The problem with spammy traffic is that these aren’t the visitors that you’d like to bring to your site. In most cases, these aren’t real visitors at all. They are bots run by spammers that hit your site and bounce right back.

When spam bots fake traffic to your site and start getting reported in your Google Analytics data, they make your data inaccurate. You will also see your site’s average bounce rate become very high due to this bot-generated traffic.

What allows for such discrepancy?

Google Analytics has a bug that allows ghost referral spammers to send fake traffic to your site and show the traffic data in your analytics reports.

2 ways to solve the spammy referral traffic problem:

Before you start fixing the spammy referral traffic, I have good news and not-so-good news. You can only block spammy referral traffic from showing up in your future Google Analytics data. There’s no way to clean historic data.

Also, the exercise of blocking traffic bots is ongoing. Every time you see a ghost referral, you will have to block it from showing up in your data so it can’t skew your reports.

Method #1: Block known bots

Google recognizes the spammy traffic issue and offers an option in Google Analytics that helps you block hits from known traffic bots.

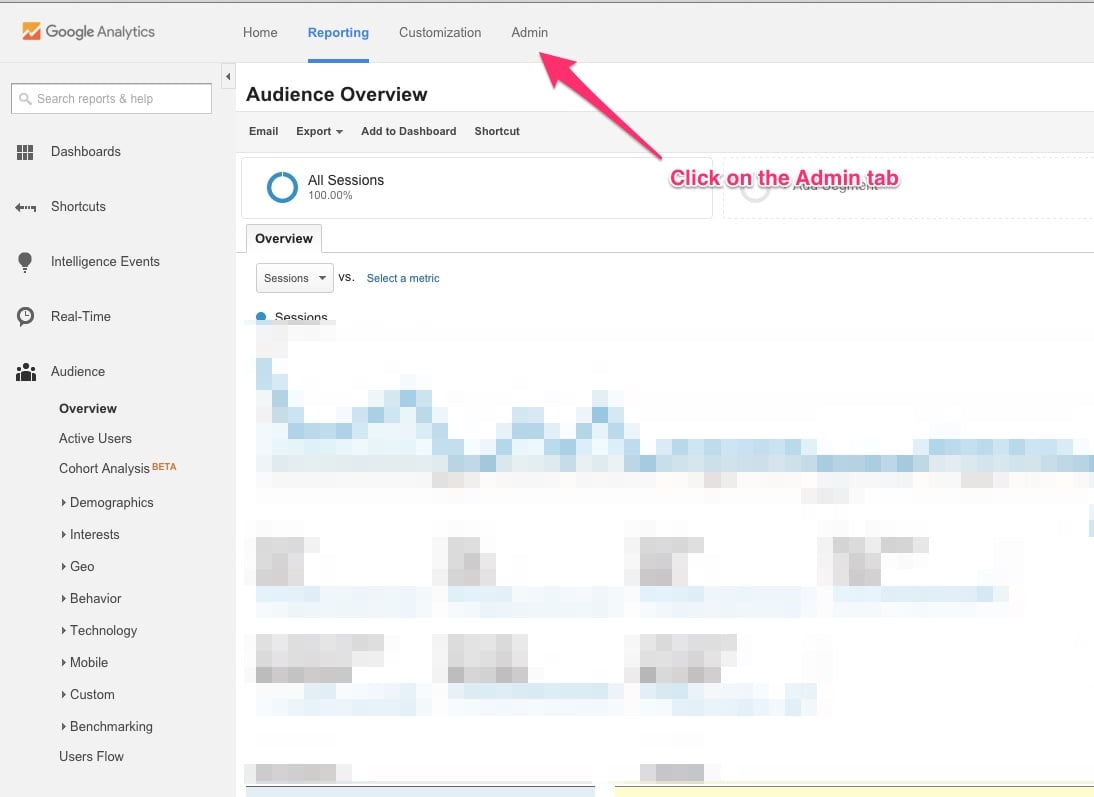

To find this option, click on the Admin tab after logging into your Google Analytics account.

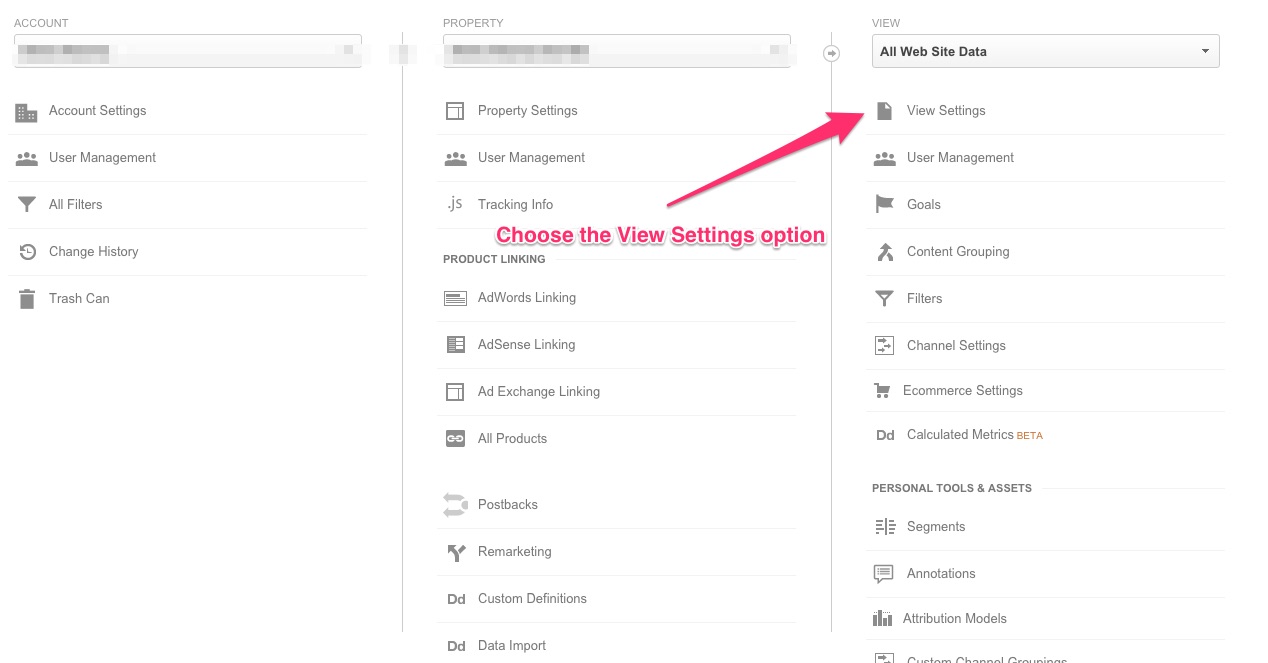

If you have multiple properties (sites) in your Google Analytics account, select the site you want to fix and click on the View Settings option.

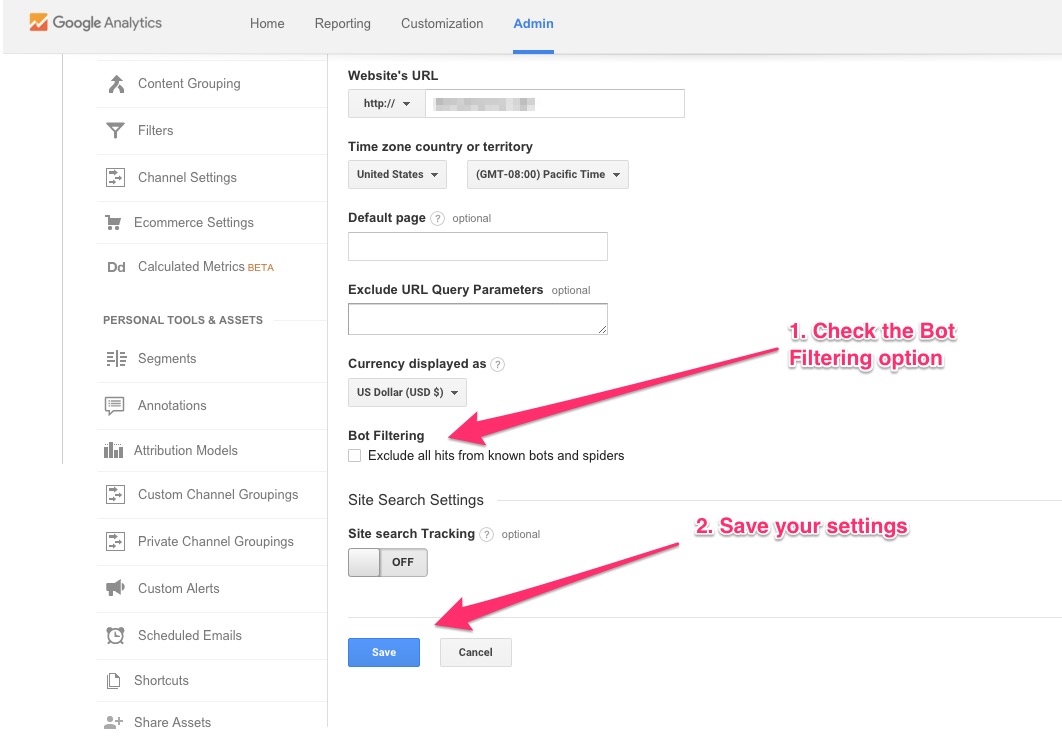

On the next page, you should see the option to “Exclude all hits from known bots and spiders.”

Check the option and save your new settings.

This option would be the best solution for the spammy traffic issue if Google could keep up with every new spam bot.

While not 100% effective, using this option will save a lot of your Google Analytics data from the effects of fake traffic.

Method #2: Block spammy referring domains one by one

In this method, you will have to pick one spammy traffic referral at a time and block it.

To block a spammy referral, first select it by navigating to the Referrals option under the Acquisition tab.

In my case, I want to block the first domain, traffic2cash.xyz.

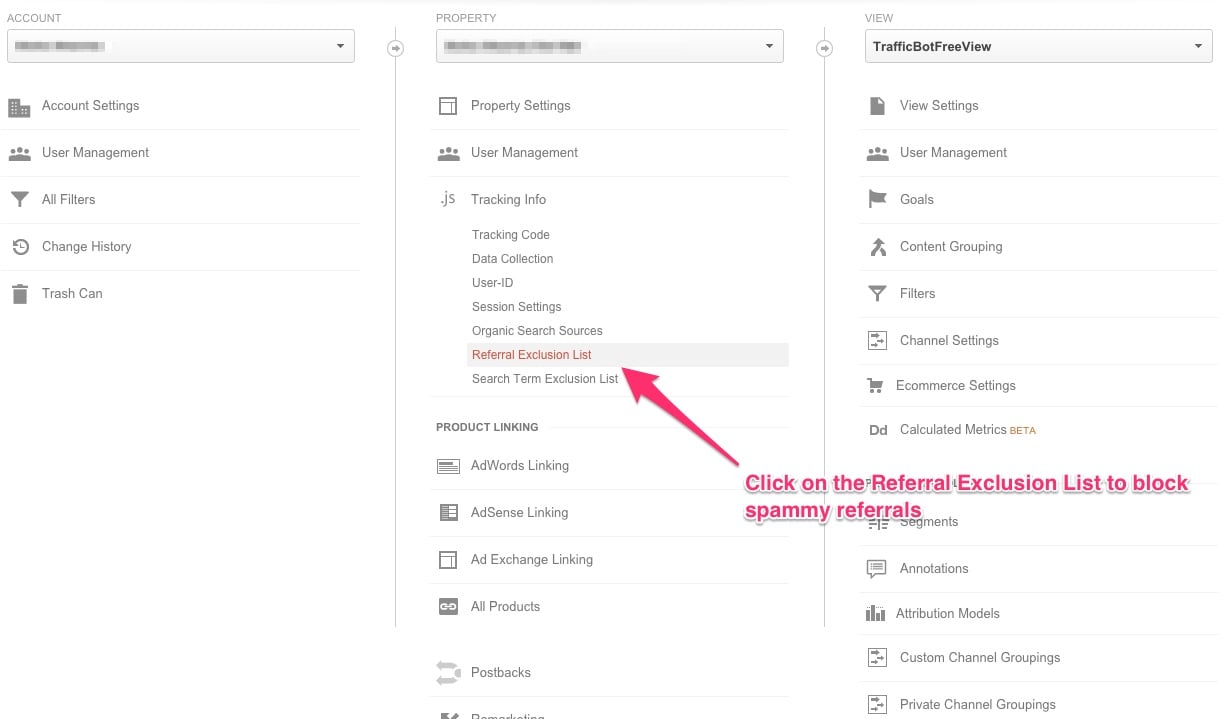

So, I copy it and head to the Admin tab. Under the second column which reads as “Property”, I click on the third option — that’s the Tracking Info option. I’m then going to choose the Referral Exclusion List.

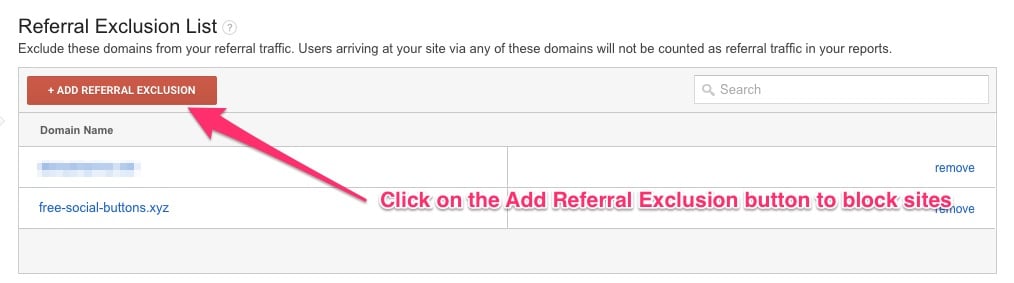

On the next screen, there’s a button that lets me add domains whose referred traffic I don’t want reported in my Google Analytics data.

You can follow the same method and add all of the spammy traffic referrals to this list and make your (future) data more accurate.

Another way to go about the spammy traffic referral problem is to create filters to exclude the spammy traffic referrals. The problem with filters is that if you don’t get the filter configuration right, you could end up causing irreversible damage to your data.

So, I suggest that you take care of this issue by using the Referral Exclusion List option.
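If you do decide to go the filter route despite that risk, the filter pattern is usually one regular expression that matches every spammy domain. Here is a minimal sketch of building such a pattern. The helper name `buildReferrerFilter` and the second domain are made up for illustration, and any pattern should be tried on a test view first, since a wrong filter permanently affects the data it touches.

```javascript
// Hypothetical helper: turns a list of spammy domains into one regex
// pattern that could be pasted into an exclusion filter.
function buildReferrerFilter(domains) {
  return domains
    .map((d) => d.trim().toLowerCase())
    .filter((d) => d.length > 0)
    // Escape regex metacharacters so "." only matches a literal dot.
    .map((d) => d.replace(/[.*+?^${}()|[\]\\]/g, "\\$&"))
    .join("|");
}

// "spam-example.test" is a placeholder, not a real spam source.
const pattern = buildReferrerFilter(["traffic2cash.xyz", "spam-example.test"]);
const matcher = new RegExp(pattern);

console.log(pattern);
console.log(matcher.test("traffic2cash.xyz")); // matches a listed domain
console.log(matcher.test("google.com"));       // does not match
```

Escaping the dots matters: an unescaped "." matches any character, so the filter could exclude more referrers than you intended.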

2. Duplicate content

Have you heard about “Google’s penalty for duplicate content”?

I’m sure you have.

But there’s no such thing. In fact, Google understands that duplicate content happens all the time all over the net.

While Google doesn’t penalize sites for publishing duplicate content, it does punish the ones that do so to manipulate the search engine rankings.

You should still resolve all the duplicate content issues on your site to keep search engine results from spiralling.

Why?

Because when Google crawlers go to different URLs on your site and find identical information, you lose precious crawler cycles. As a result, the crawlers won’t have enough resources left to crawl your freshly published content.

The most common reason for duplicate content issues is when multiple versions of the same URL offer the same content to the search engine bots.

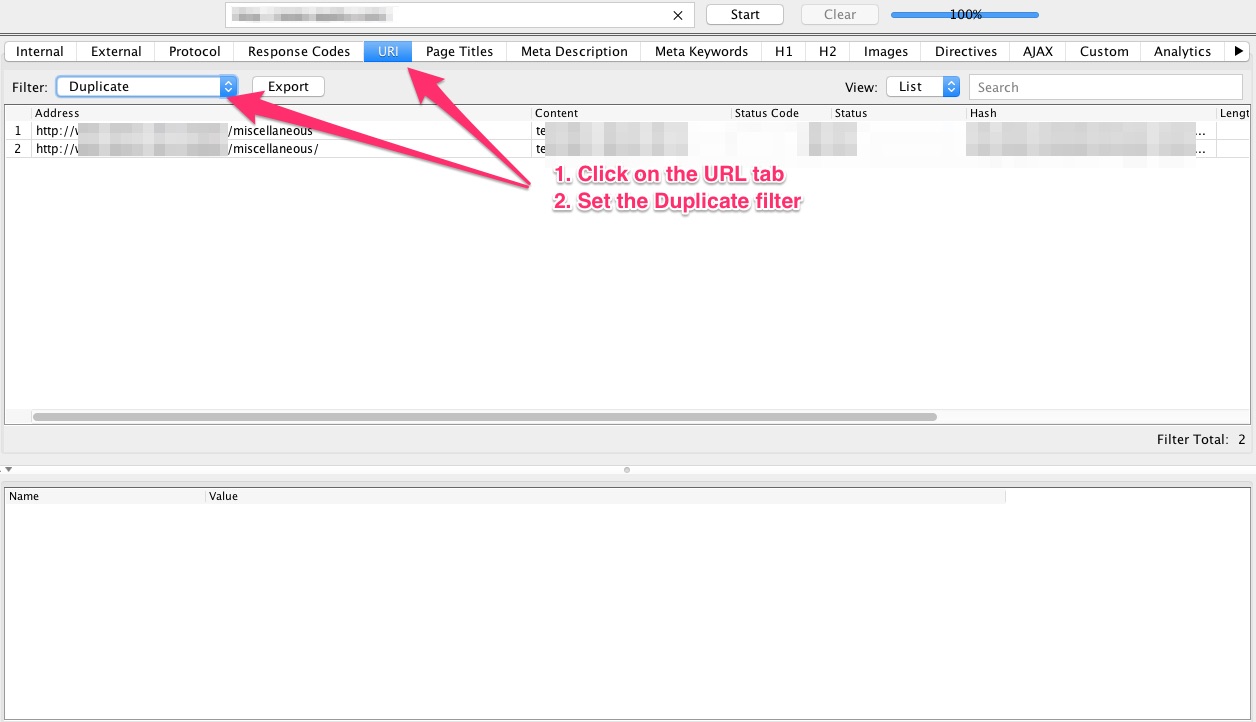

While there are several methods that can help you diagnose issues of duplicate content, I find the Screaming Frog tool to work the best when it comes to detecting issues caused by multiple URLs in your web design.

Screaming Frog crawls your site as a search engine bot and shows you your site exactly how the search engine bots see it.

To find duplicate content issues, download a copy of the Screaming Frog webmaster tool.

Once installed, enter your site’s URL and run the program.

As the crawling completes, click on the URL tab and select the Duplicate filter.

As you can see in the following screenshot, Screaming Frog fetches all of the different versions of the same URL from your site.

How to solve the duplicate content problem:

Method #1: Always mark your preferred URL

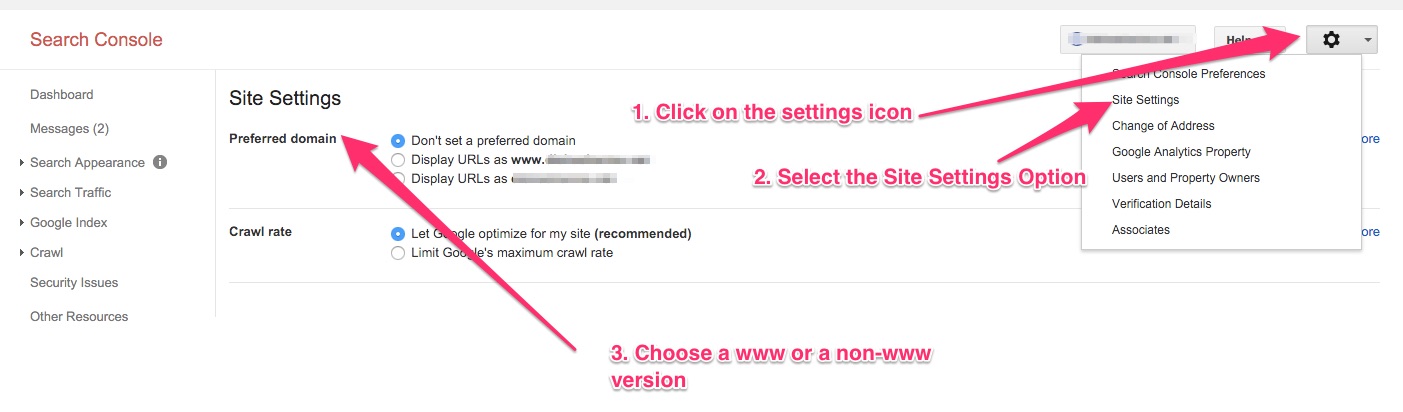

Set your preferred URL version (www vs. non-www) in Google Webmasters.

If Google finds a site linking to a non-www version of your site while you have set your preferred version as the www one, Google will treat the linking URL as the www version.

So, choose the www or the non-www version of your site and set it in your Google Webmaster Tools.

To do so, log in to Webmaster Tools and click on the settings “gear” icon at the top right. Now, choose the Site Settings option.

Next, select your preferred URL format.

You can also set up 301 redirects to alert Google and users about your preference.
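The redirect itself is normally configured on your web server or by your host, but the decision behind it is simple. As a rough sketch, assuming the www version is the preferred one and using the placeholder domain `yoursite.com`, the logic looks like this (`redirectTarget` is a hypothetical helper name, not part of any server software):

```javascript
// Assumptions for this sketch: the site lives at yoursite.com and the
// www version is the preferred one.
const BARE_HOST = "yoursite.com";
const PREFERRED_HOST = "www.yoursite.com";

// Returns the URL a 301 redirect should point to, or null when the
// request does not need redirecting.
function redirectTarget(requestUrl) {
  const url = new URL(requestUrl);
  if (url.hostname !== BARE_HOST) return null; // already preferred, or not our domain
  url.hostname = PREFERRED_HOST;               // keep path and query untouched
  return url.toString();
}

console.log(redirectTarget("https://yoursite.com/blog?page=2"));
console.log(redirectTarget("https://www.yoursite.com/blog?page=2"));
```

Keeping the path and query string intact means every non-www URL maps to exactly one www URL, instead of everything being sent to the homepage.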

Method #2: Use Rel=canonical tag

If you have the same content present on different URLs or if you’re worried that tracking parameters, backlinking sites, and general inconsistency while sharing links can cause duplicate content problems, you should employ the canonical tag.

When a search bot goes to a page and sees the canonical tag, it gets the link to the original resource. All the links to the duplicate page are counted as links to the original page. So, you don’t lose any SEO value from those links.

To add the canonical tag, you will have to add the following line of code to your resource’s original, as well as duplicate versions.

<link rel="canonical" href="https://yoursite.com/category/resource" />

Here, “https://yoursite.com/category/resource” is the link to the original resource.

The canonical tag goes inside the page’s head tag, alongside tags such as the meta description.
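If tracking parameters are the main source of your duplicate URLs, the canonical URL is just the page URL with those parameters removed. The following is a minimal sketch of that idea. The parameter list and the function name `canonicalLinkTag` are assumptions for illustration, so adjust them to whatever your own campaigns append.

```javascript
// Tracking parameters that should not create a separate URL.
// This list is an assumption; extend it to match your own links.
const TRACKING_PARAMS = ["utm_source", "utm_medium", "utm_campaign", "utm_term", "utm_content"];

// Builds the canonical tag for a page by dropping tracking parameters
// and any #fragment from its URL.
function canonicalLinkTag(pageUrl) {
  const url = new URL(pageUrl);
  for (const name of TRACKING_PARAMS) {
    url.searchParams.delete(name);
  }
  url.hash = "";
  return `<link rel="canonical" href="${url.toString()}" />`;
}

console.log(
  canonicalLinkTag("https://yoursite.com/category/resource?utm_source=newsletter&utm_medium=email")
);
```

Parameters that actually change the content, such as a page number, are left alone, so only the tracking noise is collapsed into one URL.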

Method #3: Use Noindex tag

Using the NoIndex tag is another effective method to fight duplicate content issues.

When Google bots come across a page that has the Noindex tag, they don’t index that page.

If you use the Follow tag in addition to the NoIndex tag, you can further ensure that not only is the page NOT indexed, but also that Google doesn’t discount the value of the links to or from that page.

To add the NoIndex or Follow tags to a page, you can copy the following line of code and paste it inside your page’s head tag:

<meta name="robots" content="noindex,follow">

This tag doesn’t affect the page’s title tag or its image alt attributes.

3. Improper Schema

Schema markup helps Google deliver rich snippets.

However, due to Schema markup spamming, lots of SERPs just show rich snippets and no organic results appear.

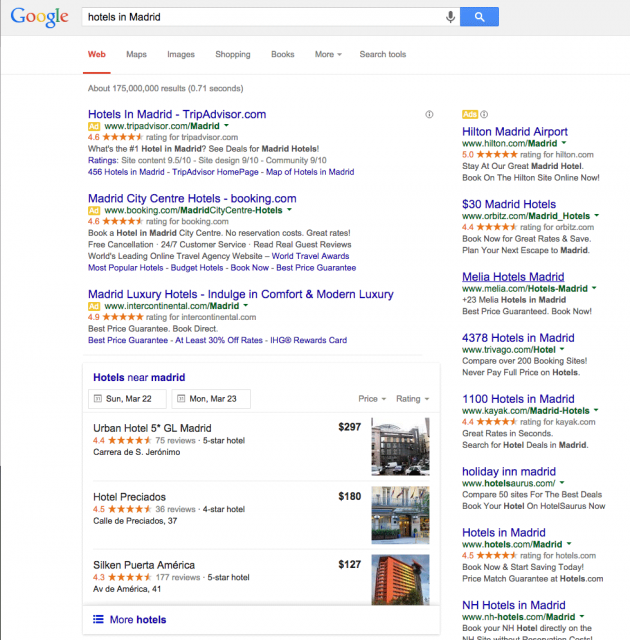

Barry Schwartz from Search Engine Land discussed this issue, citing the quality of the search results for the keyphrase “hotels in Madrid.”

He used the following screenshot to show how misuse (rather than overuse) of the schema markup leads to mediocre search results.

Do you see the problem?

As Google observed and Schwartz noted: Several hotels have misused Google’s rich snippets markup support to rank their hotels.

Google doesn’t call “hotels in Madrid” a specific item and so schema CAN’T be applied to it. Google is correct. It’s a simple search phrase and people aren’t necessarily looking for a certain hotel in Madrid that they’d like to see reviews and ratings for.

Applying schema to try and rank your landing page is a spammy on-page SEO practice.

Google clarifies this in its post about rich snippets for reviews and ratings:

Review and rating markup should be used to provide review and/or rating information about a specific item, not about a category or a list of items. For example, “hotels in Madrid”, “summer dresses”, or “cake recipes” are not specific items…

If you have implemented schema in your web design and find that Google is not returning your site’s rich snippets when you do a simple search, then Google has probably penalized your site for spammy schema.

Remember, on-page SEO is important, but keyword stuffing and inappropriate markup are usually caught by Google’s algorithms.

If it’s indeed a case of Google penalty, you should see the corresponding message in Google Search Console.

How to solve the improper schema problem:

1. If you want to implement schema on your site, your first step should be to go through Google’s Structured Data implementation guidelines.

As you can see, Google is very specific about the instances where the Schema markup can be applied.

If you look at the above example, you can see that Google only supports Schema when it applies to a specific item and not generic key phrases.

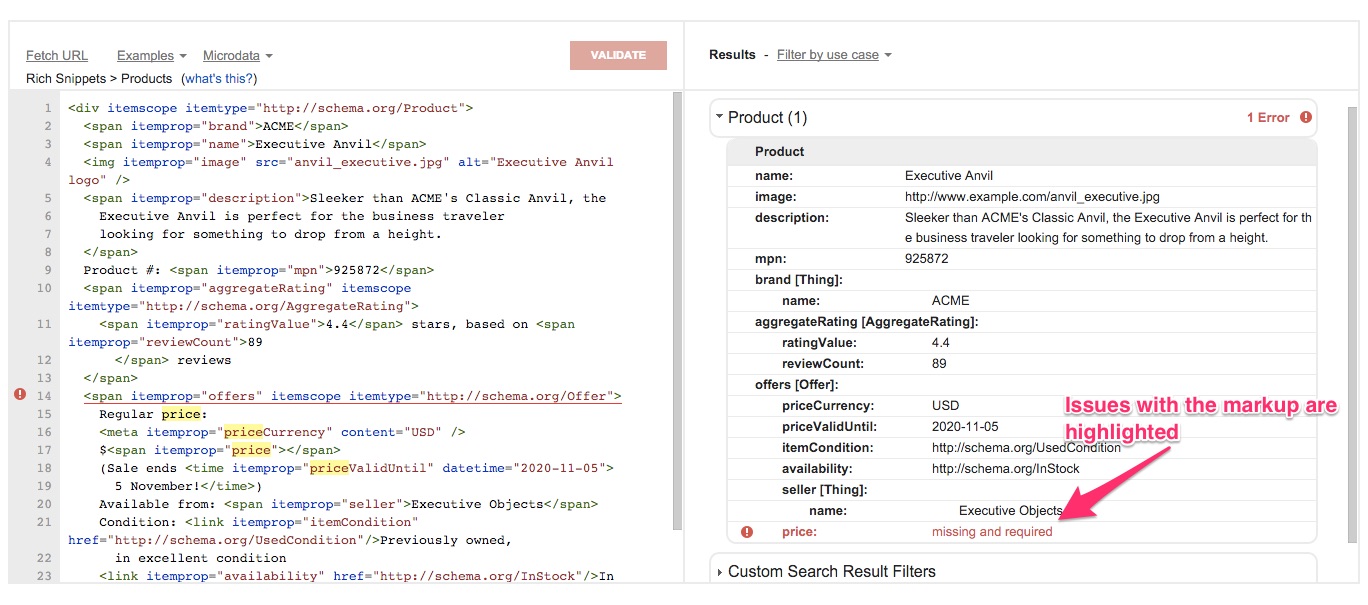

2. Run your code through Google’s “Structured Data Testing Tool”.

If you have implemented schema on your site, your next step should be to test it using Google’s tool for testing structured data.

To test your code, you can either input the page’s URL on which you have applied schema, or you can directly copy and paste the code.

Structured Data Testing Tool highlights all of the errors in your code.

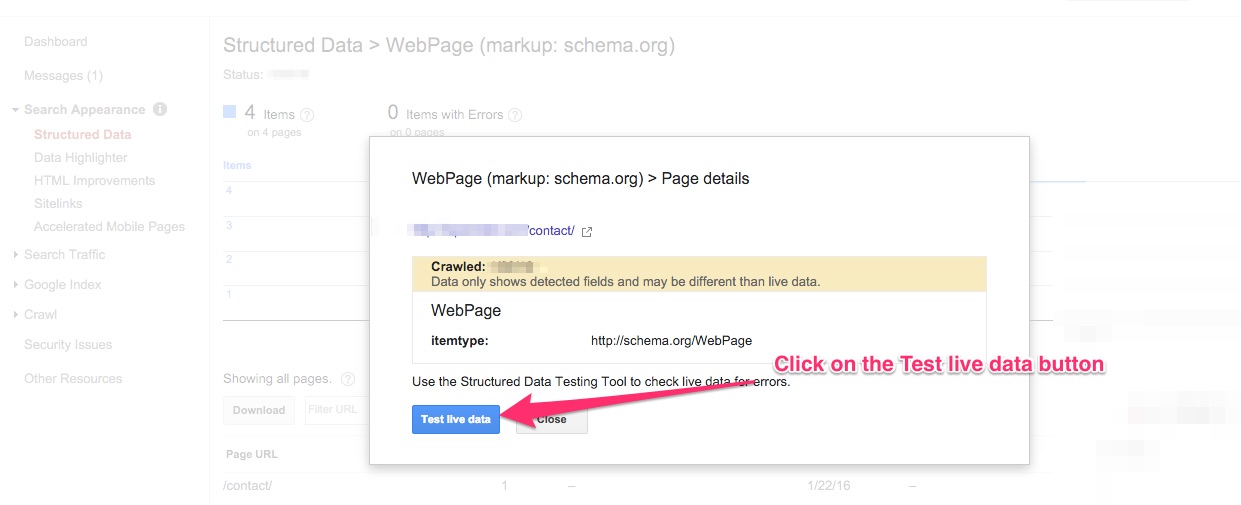

3. Look for issues in Google Search Console.

If something’s wrong with your structured data, you should see alert messages in your Webmasters account.

In fact, you can directly test your site’s schema (in Google’s structured data testing tool) by clicking on any item on the Structured data page in Webmasters and hitting the Test live data button.

Doing so will take you directly to Google’s tool for validating structured data.
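To make the “specific item” rule concrete, here is a hedged sketch of rating markup generated for one individual hotel page rather than a category page. It uses the JSON-LD format with schema.org’s `Hotel` and `AggregateRating` types. The hotel name and numbers are invented, `ratingJsonLd` is a hypothetical helper, and whether a rich snippet actually appears is always up to Google.

```javascript
// Made-up data for ONE specific hotel (not a "hotels in Madrid" list page).
const hotel = { name: "Example Hotel Madrid", ratingValue: 4.4, reviewCount: 89 };

// Wraps the rating data for a single item in a JSON-LD script tag.
function ratingJsonLd(item) {
  const data = {
    "@context": "https://schema.org",
    "@type": "Hotel",
    name: item.name,
    aggregateRating: {
      "@type": "AggregateRating",
      ratingValue: item.ratingValue,
      reviewCount: item.reviewCount,
    },
  };
  return `<script type="application/ld+json">${JSON.stringify(data)}</script>`;
}

console.log(ratingJsonLd(hotel));
```

You can paste the printed markup into the testing tool described above before putting it on a live page.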

4. Sudden dips in traffic

Dips in traffic don’t necessarily mean a problem.

Sometimes, it’s just a seasonal or day-of-week effect. You might have noticed that your traffic is somewhat slow on weekends or during the summer.

The dips that should raise flags are the steep ones. You should be able to spot such dramatic lows when you are looking at your Google Analytics traffic report.

To check whether it’s an expected dip like the ones I just described, compare your data with the same period in previous weeks, months or years. If you see the same pattern, there’s no need to worry. If you don’t, you should start investigating the possible causes.
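That comparison is simple arithmetic, and a small sketch may help. It works on session counts you have exported yourself. The function names are hypothetical, and the -30% threshold is an arbitrary assumption that you should tune to how much your traffic normally fluctuates.

```javascript
// Percentage change from a previous count to the current one.
function percentChange(previous, current) {
  if (previous === 0) return null; // no baseline to compare against
  return ((current - previous) * 100) / previous;
}

// Flags a dip as unexpected when traffic fell by at least the threshold
// compared with the same period earlier (last week, month or year).
// The default of -30 is an assumption, not a recommended value.
function isUnexpectedDip(thisPeriod, samePeriodBefore, thresholdPct = -30) {
  const change = percentChange(samePeriodBefore, thisPeriod);
  return change !== null && change <= thresholdPct;
}

console.log(percentChange(1000, 600));   // -40
console.log(isUnexpectedDip(600, 1000)); // true
console.log(isUnexpectedDip(950, 1000)); // false
```

Comparing against the same period a year ago, not just last week, is what separates a seasonal lull from a real problem.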

If you have been following my traffic updates on my personal blog, you will see that I reported a dip in traffic during the holiday season. But, that was expected.

If it’s not a seasonal dip and your traffic has indeed flatlined, it could mean that something is wrong with your analytics tracking code. Or perhaps you installed additional analytics software that’s not playing well with Google Analytics.

Such a dip could also happen if you change your CMS theme or web design template and forget to add the Google Analytics script in your new setup.

The low traffic could also be a result of Google penalizing your site. Remember that it’s not necessary that you find an algorithm update around the time that you find the discrepancy in your data as Google is constantly rolling out updates.

How to inspect sudden low traffic instances

1. First things first: See if your site has been penalized by Google.

To do so, search for an email from Google. You should also see alert messages about the penalty in Google Search Console. You can find them by clicking on the Search Traffic item and choosing the manual actions option.

Once you have confirmed that you have been hit by a Google penalty, you should fix the issue quickly and re-submit your site to the Google team.

These are some reconsideration request tips straight from Matt Cutts:

2. If you have redesigned your site or changed your theme or web design template, see if you have forgotten to copy the Google Analytics script in the new theme or template.

3. Also, check if you have changed your default URL structure. If you haven’t set up 301s, or have misconfigured them, you could see a big loss in traffic.

4. Confirm if your site got hacked.

5. If none of the above look like the real cause, perhaps your site has been a victim of negative SEO.

The following items are the hallmark of a negative SEO attack:

- Getting linked to by several spammy sites using spammy anchor texts

- Having your reputation sabotaged on social media (often by fake profiles)

- Removal of valuable backlinks

The best protection against negative SEO is to stay alert. Choose to receive alert notifications from Google Webmasters. Monitor your social media mentions and keep a close eye on quality backlinks.

5. Outdated sitemaps

You already know that search engines love sitemaps. Sitemaps help search engines in understanding your site structure and also in discovering its links for crawling.

But, as a site matures, lots of changes get introduced. A site can undergo a complete restructuring, including URL restructuring. Or, maybe some sections of the site get removed permanently.

If a sitemap is not updated, none of your major site changes will be reflected in it. As a result, search engines might come across broken links or end up crawling irrelevant sections of your site.

To avoid this, you should either create an updated sitemap and re-submit it via Google Search Console, or use a dynamic sitemap generator (one that keeps getting updated as you make changes to your site).

Google has recommended a list of tools to help you generate XML sitemaps. There are several free and paid options. You can choose any of them and create a refreshed sitemap to re-submit to Google.
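If you are curious what those generators produce, an XML sitemap is a short, regular file. Below is a minimal sketch that builds one from a list of pages, following the sitemaps.org format. The page list and the function names are placeholders, and a real generator would pull the URLs and dates from your CMS.

```javascript
// Escapes characters that are not allowed as-is inside XML text.
function escapeXml(text) {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Builds a sitemap from [{ loc, lastmod }] entries (lastmod as YYYY-MM-DD).
function buildSitemap(pages) {
  const entries = pages.map(
    (p) => `  <url>\n    <loc>${escapeXml(p.loc)}</loc>\n    <lastmod>${p.lastmod}</lastmod>\n  </url>`
  );
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    ...entries,
    "</urlset>",
  ].join("\n");
}

// Placeholder pages for illustration only.
const sitemap = buildSitemap([
  { loc: "https://yoursite.com/", lastmod: "2024-01-15" },
  { loc: "https://yoursite.com/shop?cat=1&sort=new", lastmod: "2024-01-10" },
]);
console.log(sitemap);
```

Regenerating the file from your current list of live pages each time you publish is what keeps removed sections and old URLs out of it.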

6. Wrong use of UTM parameters

Do you use UTM parameters like the campaign source, name, and medium to measure your marketing ROI?

I’m sure you do.

But, do you know these UTM parameters are not meant for tracking internal links?

I have seen so many people use UTM parameters to track the performance of on-page marketing items like promo bars, sidebars and banners that link to different parts of their sites.

Example:

Suppose a person lands on your blog through Google search.

Then, he or she navigates to the store section of your site using the Shop menu item on your site’s top navigation.

If you track internal links with UTM parameters, you might be using a link like the following to track the clicks on the Shop menu item:

http://shop.mysite.com/?utm_source=homepage&utm_campaign=headermenu

When Google Analytics reports the source of this visitor, it will report “homepage” and not “Google Search or Organic”.

When you use UTM parameters for tracking internal links, you overwrite the real data. Like in the above case, you misrecorded this visitor as coming through the homepage.

Over time, the wrong use of UTM parameters can ruin your raw data.
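To find out whether your own site has this problem, you can check your links for UTM parameters that point back to your own domain. Here is a small sketch of that check. The function name is hypothetical, and treating every subdomain of your site as internal is an assumption that may not match how your analytics property is set up.

```javascript
// Returns true when a link points to the site itself (or one of its
// subdomains) AND carries at least one utm_ parameter.
function isInternalUtmLink(href, siteDomain) {
  const url = new URL(href);
  const internal =
    url.hostname === siteDomain || url.hostname.endsWith("." + siteDomain);
  const hasUtm = [...url.searchParams.keys()].some((key) => key.startsWith("utm_"));
  return internal && hasUtm;
}

const links = [
  "http://shop.mysite.com/?utm_source=homepage&utm_campaign=headermenu",
  "http://mysite.com/about",
  "http://partner.example/?utm_source=mysite",
];

// Only the first link is an internal link tagged with UTM parameters.
console.log(links.filter((href) => isInternalUtmLink(href, "mysite.com")));
```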

Instead of using UTM parameters for tracking internal links (clicks), you can use events that are triggered by specific user actions.

How events work:

To define an event, you need to add a small snippet of code to every element you want to track, using the onclick attribute.

Sitepoint explains this as, “The onclick event handler captures a click event from the users’ mouse button on the element to which the onclick attribute is applied.”

Simply put, you can create an event on all the clickable elements on your page using the onclick function.

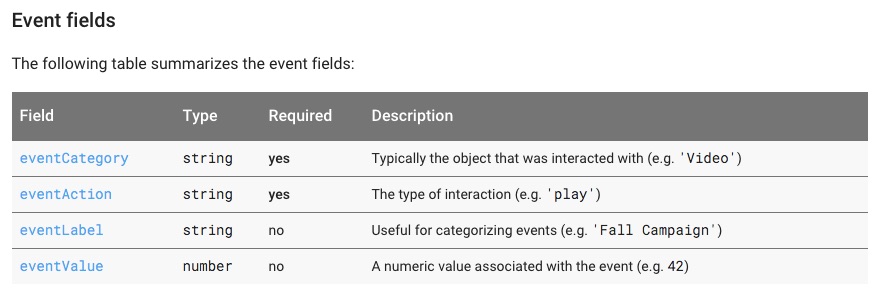

Here’s Google’s syntax code to trigger an event:

ga('send', 'event', [eventCategory], [eventAction], [eventLabel], [eventValue], [fieldsObject]);

As you can see in the above screenshot, the category and action fields are mandatory. They are enough for tracking clicks on elements like buttons, navigation labels (menu items), banners and so on.

The category field will contain the object that was clicked upon, such as a top menubar item.

The action field, on the other hand, will list the action that was performed, such as a video being played, a resource being downloaded or simply a button being clicked.

For our example, let’s say that the top menubar item got clicked. So, the action is click.

Our event tracking code becomes:

ga('send', 'event', 'TopMenuItem1', 'click');

Now, all that you need to do is to go back to the link you want to track. In our case, it’s the link that’s added to the top menu bar’s first item. To this link, we need to add the above code.

Suppose the first top menu bar item linked to http://www.yoursite.com/home. You’ll now have to add the snippet inside its anchor tag:

<a href="http://www.yoursite.com/home" onclick="ga('send', 'event', 'TopMenuItem1', 'click');">Home</a>

Tracking how users interact with your site will be easy if you have a simple HTML site as you can easily find the relevant links in your code and make the changes.
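If you would rather not paste an onclick attribute into every link, one alternative is to mark the links and attach the tracking from a single script. The sketch below assumes a made-up convention where tracked elements carry a `data-ga-category` attribute. `bindClickTracking` is a hypothetical helper, and `send` stands in for the `ga` function that analytics.js defines on your page.

```javascript
// Attaches a click listener to every element that has a
// data-ga-category attribute (a convention assumed for this sketch),
// e.g. <a href="/home" data-ga-category="TopMenuItem1">Home</a>
function bindClickTracking(doc, send) {
  const elements = doc.querySelectorAll("[data-ga-category]");
  elements.forEach((el) => {
    el.addEventListener("click", () => {
      // Same call as the inline version: category first, then the action.
      send("send", "event", el.getAttribute("data-ga-category"), "click");
    });
  });
  return elements.length; // how many elements are now tracked
}

// In the browser, once analytics.js has loaded:
// bindClickTracking(document, ga);
```

The upside of this approach is that adding tracking to a new link only needs one extra attribute, which is often easier to manage in a CMS template than inline JavaScript.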

However, if you use a CMS, tracking clicks can be challenging. I tried to look for a plug and play solution for you, but I couldn’t find one I trusted. You will need a developer’s help to configure it correctly.

The same goes for tracking clicks on other items.

But, here’s where heatmap tools like CrazyEgg can be very helpful. Along with all the other things these tools can do, they show you the different elements on your site that get clicked.

If you don’t stop tracking internal clicks using UTM parameters, you’ll end up trashing your Google Analytics data.

7. Speed issues

In 2010, Google officially included speed — a page’s loading time — as a factor in ranking. Search engines penalize slow loading web designs and pages.

In its official blog post about making page speed a ranking factor, Google said that speed doesn’t just improve user experience, but also stated that its studies have shown that making a site fast also brings down a site’s operational costs.

No matter how much you optimize your site, there are always opportunities to do better.

Before I show you how to make your site faster, I’d like to tell you about a few tools you can use to analyze your site’s performance with regard to speed.

Once you know your site’s current status, you can try following the speed tips one by one and see how each of them improves your site speed.

Tools to test a site’s speed:

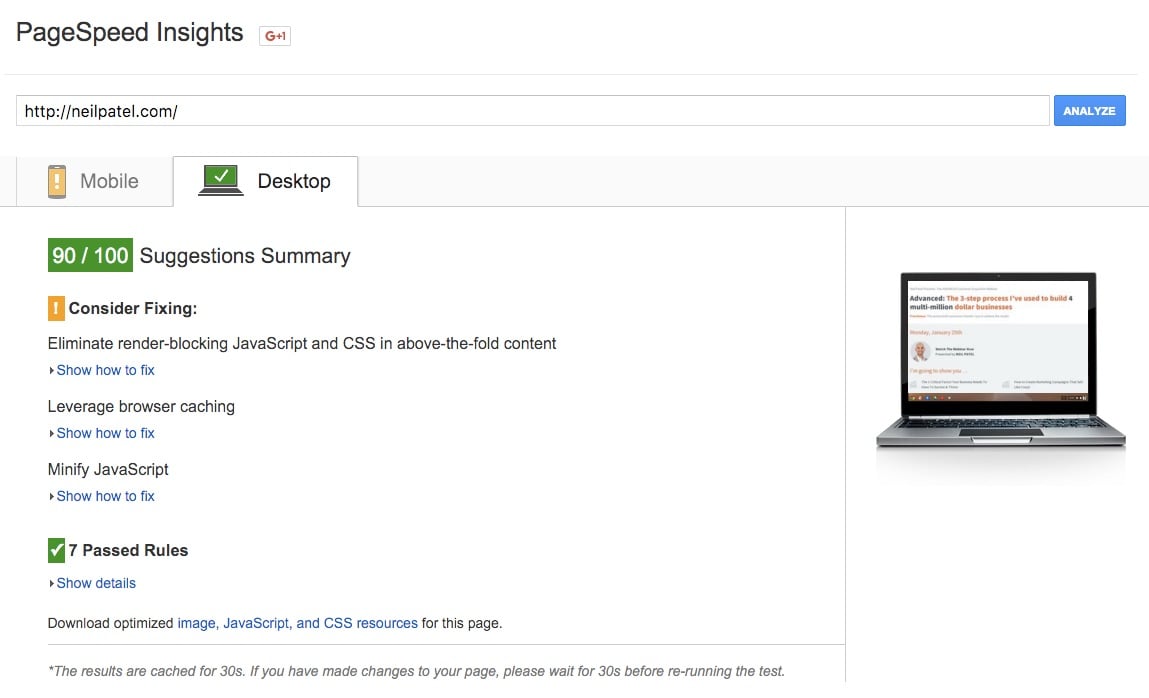

Page Speed – This is Google’s tool to help site owners measure the performance of their sites. In addition to speed statistics, Page Speed also gives insights that site owners can use to improve their sites’ loading times, which in turn can help their search rankings.

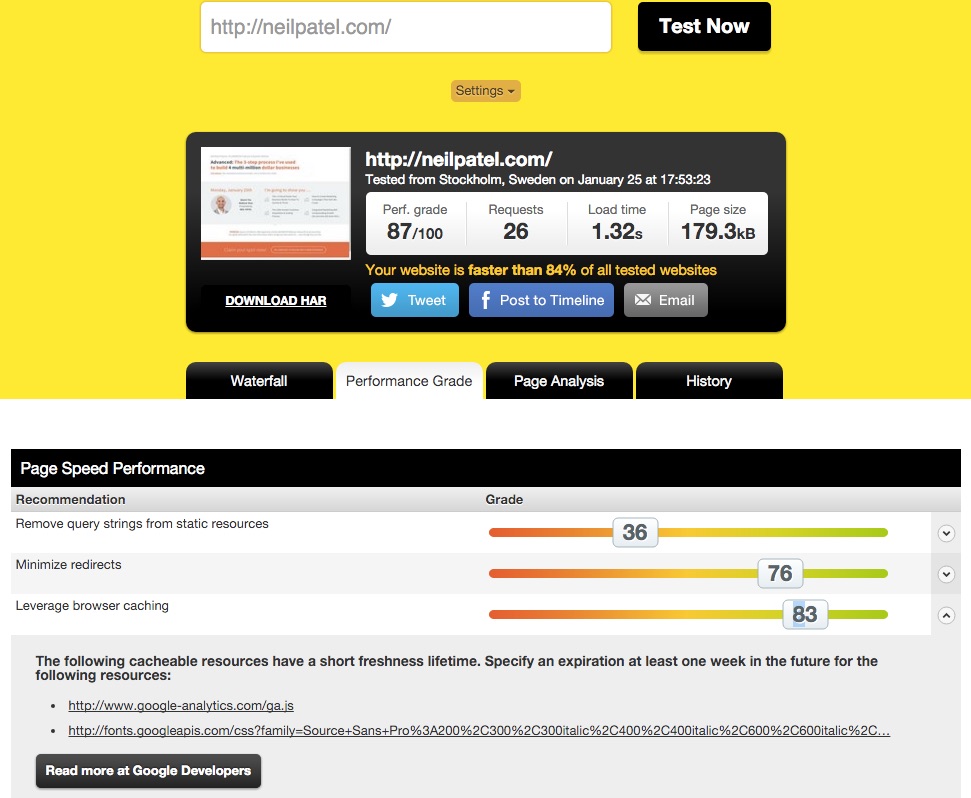

Pingdom Website Speed Test: Just like Page Speed, the Pingdom Website Speed Test tool also lets you measure your site’s performance and gives you actionable tips to optimize it.

As you can see in the above screenshot, the tool is asking me to set the expiration of some non-regularly updated items to at least a week in the future.

When you run your site through this tool, it will give you similar, specific and actionable items.

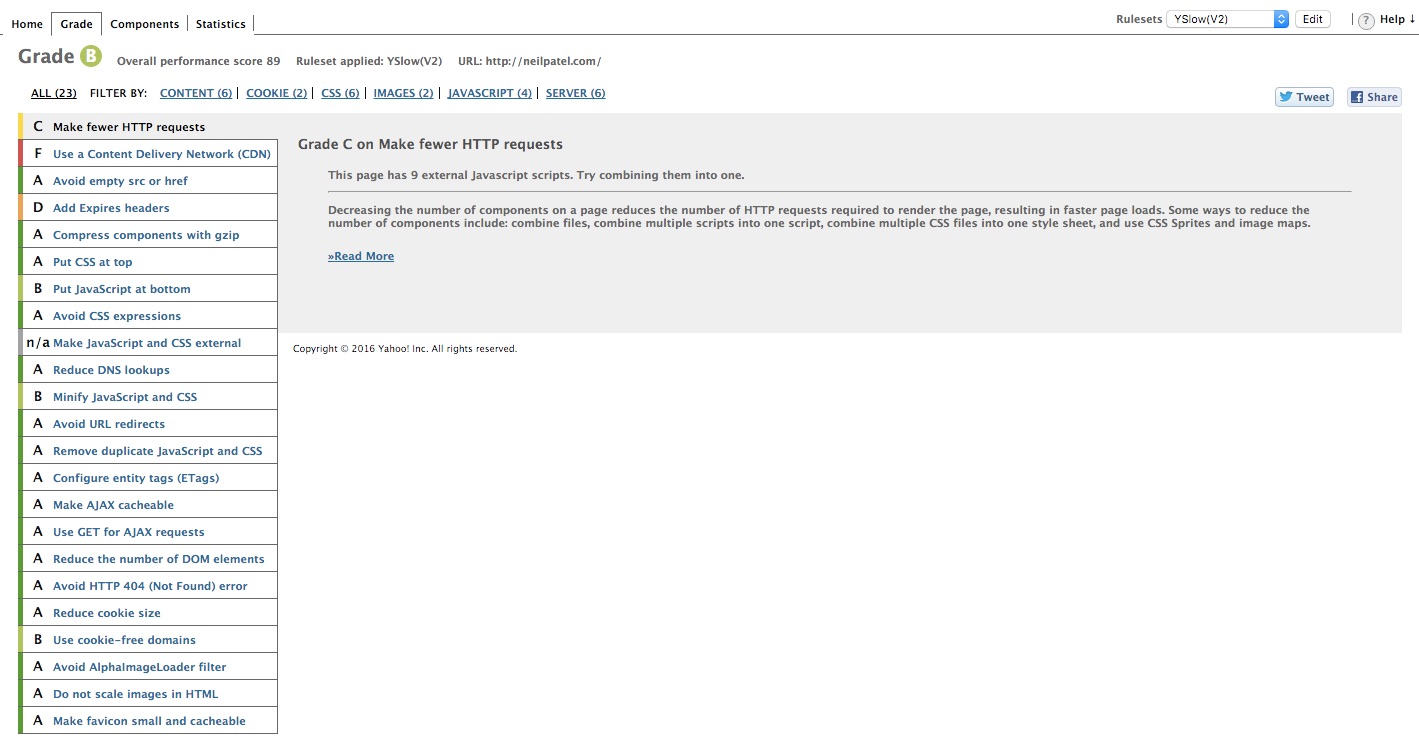

YSlow – YSlow is another page performance test tool that shows how your site performs against different performance criteria as defined by Yahoo.

To test your site using YSlow, you’ll be required to install its addon to your web browser. After getting the addon, just go to your site and initiate the addon.

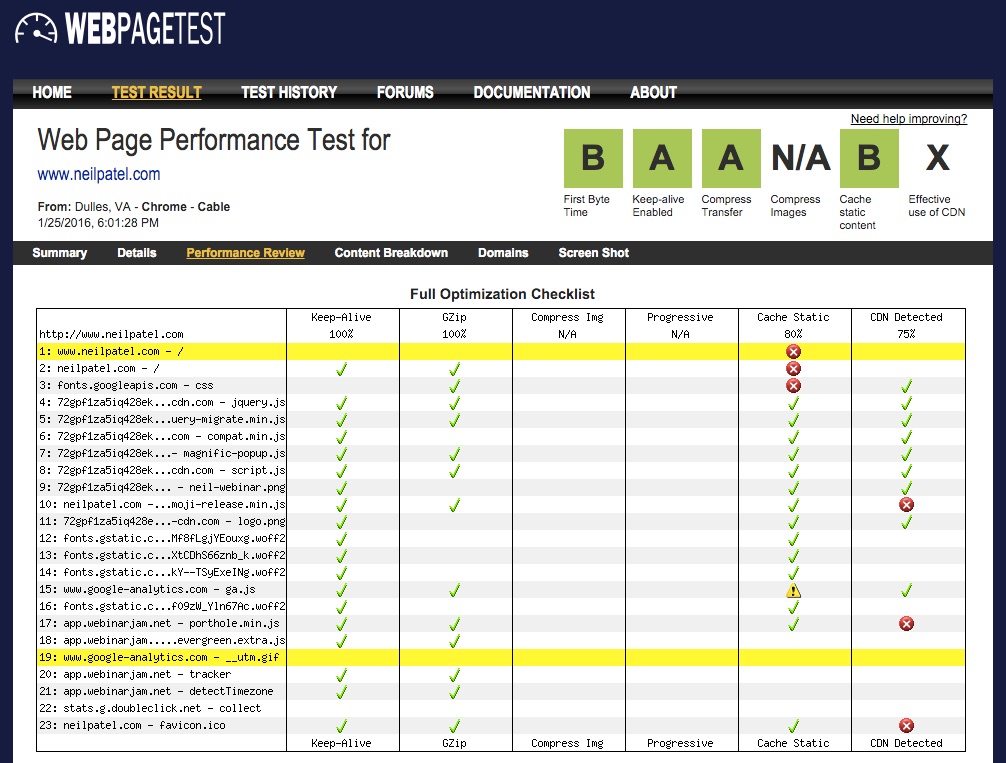

WebPageTest – The WebPageTest tool also measures your site’s speed performance.

Along with the important speed metrics, the WebPageTest tool also produces an optimization checklist listing all of the items that are configured correctly, as well as those that are missing. Resolving these could make your site faster.

Before starting to optimize your site, it helps to know where you’re starting from. So run your site through some of these tools and note how your site performs in them before you apply any changes.

Tips to improve your site speed:

Tip #1 – Optimize images: Nothing kills a site’s speed more than heavy images. To prevent images from bloating your site, keep them under 100kb.

Also, use the jpeg format. Use the png format only when you absolutely need a transparent background.

These two image optimization tips can work great to keep your site fast. But, if you have many huge images on your site, you might want to optimize them.

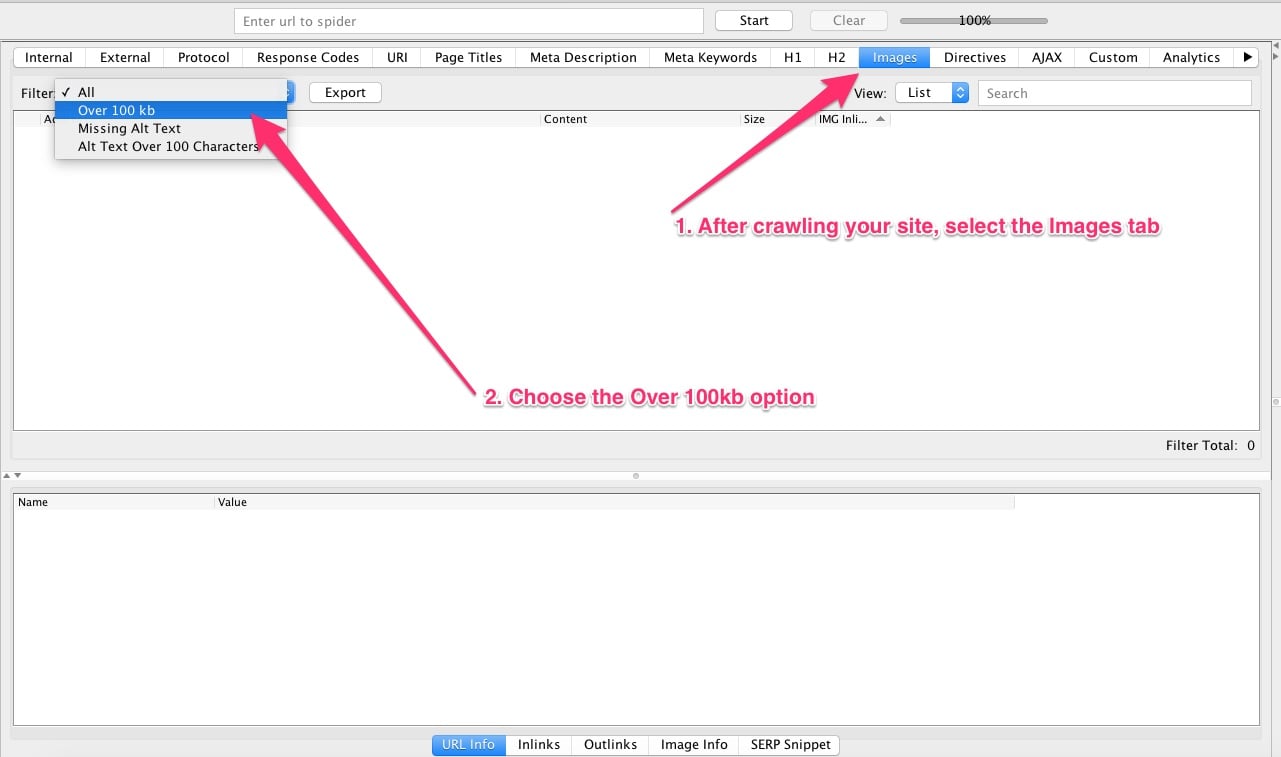

To optimize them, you could first use a tool like Screaming Frog and find all of the images you need to compress.

I’ve already shown you how Screaming Frog works (see point no. 2). In the earlier point, we looked for duplicate content issues. This time, we’ll apply the filter to images and look for the ones that are over 100kb.

Now that you have a list of all the images you need to compress, you can use one of the many online image compression tools.

If you run a WordPress site, you can use a plugin like WPSmush that helps optimize each image you upload to your Media library.

Tip #2 – Remove unused plugins: Another practice that will help keep your site fast is to remove all of the unused products from your web design. This includes both unused themes as well as plugins.

Often site owners just deactivate the stuff they don’t need. While deactivated products don’t load any resources, they do get outdated. Outdated products can pose security threats and potential performance issues.

Tip #3 – Avoid Redirects: Redirects create more HTTP requests and slow down a site. Redirects are commonly used to handle duplicate content issues.

But, if you stay consistent with your URL structure from the beginning, you will create fewer duplicate URLs and will need fewer redirects.

Tip #4 – Optimize your above-the-fold area first: If you have been observing the trend, you may have noticed more and more sites using huge images in their fold areas.

Often, these images take very long to load and thereby create a bad user experience.

Before you start optimizing the rest of your site, see how you can make your fold area load faster.

Other issues that cause a slow-loading fold area include requests to third-party widgets, services, stylesheets and more. To handle all of these issues, load your main content before loading other elements, like the sidebar.

Also, use inline styling in cases of small CSS files. Try to create only one external stylesheet. Creating multiple stylesheets requires a web browser to download them all before being able to render data.

Tip #5 – Enable compression: Using gzip compression can reduce the size of the transferred response by up to 90%.

To enable gzip compression on your web server, you should reach out to your web host. In most cases, if you do a simple search like [hosting provider] + gzip compression, you will find help.

If you use managed WordPress hosting, it’s possible that your web host has already enabled gzip compression by default.
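To get a feel for what gzip does to a text response, you can compress some markup yourself. This is only a rough sketch using Node.js and its built-in `zlib` module. The sample markup is invented and deliberately repetitive, so it will compress better than a typical real page.

```javascript
const zlib = require("zlib");

// Invented, highly repetitive markup, similar in shape to a long menu or list.
const html = '<li class="menu-item"><a href="/page">Page</a></li>\n'.repeat(500);

const original = Buffer.from(html, "utf8");
const compressed = zlib.gzipSync(original);

// Share of bytes that no longer need to travel over the network.
const savedPct = (1 - compressed.length / original.length) * 100;

console.log(`original: ${original.length} bytes`);
console.log(`gzipped:  ${compressed.length} bytes`);
console.log(`saved:    ${savedPct.toFixed(1)}%`);
```

The browser decompresses the response automatically, so visitors see exactly the same page, only sooner.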

Tip #6 – Minify resources: When you run your site through any of the above performance measuring tools, you’ll see that one of the most common suggestions you’ll get would be to minify resources.

To minify resources means to compress your HTML, CSS and JS code.

If you don’t have technical skills, you might need help using the different compressors and services that compress and optimize your code for faster performance.

To minify HTML, you can use a tool like HTML compressor.

HTML compressor requires you to input your site’s source code. It then generates a downloadable HTML file with the compressed code.

To minify CSS, you could use CSS Compressor.

Once you copy your original CSS code and run the compression, CSS Compressor will give you a compressed version of your code. CSS Compressor will also show you the number of bytes that the compressed code saved.

For minifying JavaScript, JSCompress is a good tool. To get your optimized JS code, you will have to copy your original JS code and feed it to the program. Just like CSS Compressor, JSCompress will show you the amount of data that the compression saved.

If you use a default theme or template, your theme could have several files. So, I’ll recommend that you seek help from a developer for optimizing. If you don’t do this with care, you might end up breaking your theme and your site.

A good way to go about it would be to contact the theme store and ask them to show you exactly how you can minify the resources in their theme.
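If you’re wondering what these compressors actually do to your code, here is a deliberately naive sketch for CSS. It strips comments and unneeded whitespace, and that is all. The function `minifyCss` is written for illustration only. It doesn’t understand strings, url(...) values or unusual selectors, so don’t use it in place of a real minifier.

```javascript
// Naive CSS minifier for illustration only.
function minifyCss(css) {
  return css
    .replace(/\/\*[\s\S]*?\*\//g, "")   // drop /* comments */
    .replace(/\s+/g, " ")               // collapse runs of whitespace
    .replace(/\s*([{}:;,])\s*/g, "$1")  // remove spaces around punctuation
    .replace(/;}/g, "}")                // drop the last semicolon in a block
    .trim();
}

const source = `
/* main navigation */
.nav a {
  color: #333;
  margin: 0 10px;
}
`;

const minified = minifyCss(source);
console.log(minified); // .nav a{color:#333;margin:0 10px}
console.log(`${source.length} characters before, ${minified.length} after`);
```

The rules are the same before and after; only the characters the browser doesn’t need have been removed.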

8. Thin content

Shallow pages or pages with thin content are usually looked upon as low-quality content by Google.

People often confuse thin content with sites or pages that have little text content. But that’s not how Google sees it. By thin content, Google means all of the sites that offer little or no original value and little-to-no on-page SEO.

If you scrape content or if you publish nothing but roundup posts, often just rehashing information that is already available, Google might think that you are offering low-quality content on your site.

If Google finds that your site is offering thin content, Google might take action against the specific pages on which the content quality is poor or there might also be a site-wide action.

Google specifically lists the following four types of thin content:

- Automatically generated content

As you can tell, automatically generated content is content that is created by a program. If instead of writing a post from scratch, you simply copy a post and use a program to spin it, your content will be counted as spammy content.

Google can recognize instances of program-generated content.

In the above example, you’re actually creating spun content. However, thin content is not always created with malicious intent, and it will still affect your search results negatively. For example, if you have a site that has content in English, and later you decide to offer a Spanish version of your site, you might just put your content into a tool like Google Translate to create the Spanish version of your content.

This content will also be considered auto-generated content and Google might look at it as spammy content.

The bottom line: any content that is not created, reviewed or revised by a human being is counted as thin content.

- Boilerplate content

Almost every blog participates in affiliate programs these days. There’s nothing wrong with this.

However, when many sites start reviewing the same product, they end up publishing the product description. You can’t really avoid using the product description when you’re describing a product as people might want to learn about its specs.

But, this problem becomes severe when Google finds the same content across the entire affiliate network. The product description starts acting like boilerplate text that doesn’t add any value for the users. Simply put, they become instances of duplicate content.

I am assuming that you too participate in some affiliate programs, so I’d suggest that you add your personality and unique perspective every time you promote an affiliate product.

As Google Webmasters puts it, “Good affiliates add value, for example by offering original product reviews, ratings and product comparisons.”

- Scraped content

Google doesn’t appreciate content scraping.

If you post no original content on your site and simply re-publish content from other sites, or re-publish slightly modified content from other sites (spun content), Google might take action against you.

If you have to re-publish excerpts or quotes from your favorite sites, you can do so. However, your site can’t offer ONLY REPUBLISHED CONTENT with no original insights.

- Doorway pages

Doorway pages are pages that are created to rank for specific queries. These pages try to manipulate the search engine rankings by ranking for particular key phrases and redirecting the traffic to the intended page.

To see if a page on your site might look like a doorway page to Google, you should see if the page offers any real value. If so, you’re doing fine.

In case you find that the page is only leading the organic traffic to another page on your site, or you’re requiring users to do a particular action (like subscribe to your list) to get value from that page, your page might be considered a doorway page.

Conclusion

Whether you have a few SEO issues or dozens of them, you should try and tackle them one by one. Sure, you may not be able to get them all right straight away. But, if you ignore them, it will just create more problems in the long run.

I know I used to have problems with page speed on Quick Sprout and I didn’t end up doing anything about it. But once that was fixed I saw a 30% increase in search traffic. That’s a nice gain. But, technically, I should have had that extra traffic for years… I just ignored my load time stats when I shouldn’t have.

I hope you’re now ready to fix some of the most common (and frustrating) SEO issues on your site.

Which of these SEO issues are you facing currently? And what other SEO problems do you run into often?